Moving to a new ERP system or upgrading within an existing Oracle environment is a high-stakes initiative. Between legacy data, business-critical configurations, and compliance requirements, the success of a migration hinges on a single factor: data integrity.

Too often, data migration is treated as a one-time lift-and-shift exercise, resulting in inconsistent reports, broken workflows, and downstream errors that take weeks (or months) to unwind. Ensuring clean, validated, and traceable data throughout every phase of migration (before, during, and after) is what separates seamless transitions from disruptive ones.

Why Data Integrity Is the Critical Migration Risk

Even in the best-planned Oracle migration projects, data is a persistent source of complexity. Here’s why:

- Legacy system inconsistencies: Source systems may contain outdated, duplicated, or orphaned data. Without proper cleansing, this gets inherited into the target system.

- Multiple data formats: Transactions, customer records, supplier records, and chart of accounts data may follow different conventions or structures across business units.

- Business rule misalignment: Data fields may not translate cleanly between Oracle EBS and Oracle Cloud modules without transformation logic or rule mapping.

- Time constraints: Tight go-live windows often leave little room for comprehensive data validation or iterative testing cycles.

For finance and IT leaders, the bottom line is clear: flawed data means flawed reporting, broken controls, and potential audit exposure. Every effort to automate, optimize, or modernize ERP systems is undermined if the data foundation can’t be trusted.

Pre-Migration: Laying the Groundwork with Cleansing and Mapping

Strong data governance starts well before the first script is run. During the planning and assessment phase, the focus should be on establishing a clean, standardized, and clearly mapped dataset.

Key activities include:

- Data profiling and quality assessment: Identify anomalies, duplicates, null fields, and format inconsistencies across the source system.

- Business rule alignment: Engage finance and operations to validate how data should be mapped or transformed based on process logic—not just field matching.

- Retention policies: Archive obsolete or inactive records, such as old supplier accounts or inactive GL codes, to reduce clutter and improve performance.

- Data enrichment: Where applicable, supplement existing data with missing fields or updated values to align with Cloud-native requirements.
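As a rough illustration of the profiling step, the sketch below counts null fields, duplicate keys, and format violations in a small set of supplier rows. The function name `profile_records`, the field names, and the tax ID pattern are all assumptions made for the example; a real assessment would read from the actual source tables and apply rules agreed with the business.

```python
import re
from collections import Counter


def profile_records(records, key_field, format_rules):
    """Summarize nulls, duplicate keys, and format violations in source rows.

    records      -- list of dicts, one per source row (hypothetical layout)
    key_field    -- field expected to be unique per row
    format_rules -- {field_name: regex the whole value must match}
    """
    null_counts = Counter()
    format_violations = Counter()
    key_counts = Counter()

    for row in records:
        key_counts[row.get(key_field)] += 1
        for field, value in row.items():
            if value is None or str(value).strip() == "":
                null_counts[field] += 1
            elif field in format_rules and not re.fullmatch(
                format_rules[field], str(value)
            ):
                format_violations[field] += 1

    duplicates = sorted(
        key for key, count in key_counts.items() if count > 1 and key is not None
    )
    return {
        "row_count": len(records),
        "null_counts": dict(null_counts),
        "duplicate_keys": duplicates,
        "format_violations": dict(format_violations),
    }


# Illustrative data only; not taken from any real system.
suppliers = [
    {"supplier_id": "S001", "name": "Acme Corp", "tax_id": "12-3456789"},
    {"supplier_id": "S002", "name": "", "tax_id": "98-7654321"},
    {"supplier_id": "S001", "name": "ACME Corporation", "tax_id": "123456789"},
    {"supplier_id": "S003", "name": "Globex", "tax_id": None},
]

report = profile_records(suppliers, "supplier_id", {"tax_id": r"\d{2}-\d{7}"})
print(report)
```

A summary like this gives finance and operations something concrete to review before mapping decisions are made: which fields are empty, which keys collide, and which values break the expected format.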

Migration Execution: Validating Data at Every Step

When the actual data migration begins—whether via ETL tools, integration scripts, or manual extracts—there must be controls in place to track data quality throughout the process.

Critical controls to implement:

- Pre-load validation reports: Use automated scripts to compare source and staging data for consistency and completeness before load.

- Trial conversions: Perform test runs of high-risk datasets (e.g., open payables, journal entries, supplier bank details) to catch mapping issues early.

- Reconciliation dashboards: Create real-time reports that flag mismatches, missing values, or transformation failures between source and target environments.

- Version-controlled scripts: All transformation logic, mapping sheets, and scripts should be tracked in a centralized repository with approval workflows.

This is where automation becomes invaluable, especially for repeatable validation across large datasets. oAppsNET frequently deploys regression testing scripts and data audit tools to reduce human error and ensure traceability.
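To make the pre-load validation idea concrete, here is a minimal sketch of a source-to-staging comparison. It is not a description of any specific oAppsNET tool. It assumes both datasets are available as lists of dicts, that the compared fields should hold identical values once transformation logic has been applied, and that names such as `compare_source_to_staging` and `invoice_id` are purely illustrative.

```python
import hashlib


def row_fingerprint(row, fields):
    """Hash the selected fields of a row so rows can be compared cheaply."""
    joined = "|".join(str(row.get(field, "")).strip() for field in fields)
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()


def compare_source_to_staging(source, staging, key_field, fields):
    """Report keys that are missing, unexpected, or changed in staging."""
    src = {row[key_field]: row_fingerprint(row, fields) for row in source}
    stg = {row[key_field]: row_fingerprint(row, fields) for row in staging}
    return {
        "missing_in_staging": sorted(src.keys() - stg.keys()),
        "unexpected_in_staging": sorted(stg.keys() - src.keys()),
        "changed": sorted(
            key for key in src.keys() & stg.keys() if src[key] != stg[key]
        ),
    }


# Illustrative open-payables rows; amounts kept as strings to avoid float noise.
source_rows = [
    {"invoice_id": "INV-1", "supplier": "S001", "amount": "100.00"},
    {"invoice_id": "INV-2", "supplier": "S002", "amount": "250.50"},
    {"invoice_id": "INV-3", "supplier": "S003", "amount": "75.00"},
]
staging_rows = [
    {"invoice_id": "INV-1", "supplier": "S001", "amount": "100.00"},
    {"invoice_id": "INV-2", "supplier": "S002", "amount": "250.05"},
    {"invoice_id": "INV-4", "supplier": "S004", "amount": "10.00"},
]

result = compare_source_to_staging(
    source_rows, staging_rows, "invoice_id", ["supplier", "amount"]
)
print(result)
```

Because the check is deterministic and cheap, it can be rerun on every trial conversion, and the script itself can live in the same version-controlled repository as the mapping logic.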

Post-Migration: Sustaining Confidence with Auditability

Once the migration is live, the focus shifts from validation to assurance: the transferred data must continue to perform reliably in business operations and audit scenarios.

Post-migration best practices include:

- Reconciliation of balances: Confirm that trial balances, subledger totals, open items, and cumulative GL activity match between the source and target systems.

- Audit trails: Maintain detailed logs of data transformations, approvals, and script changes for compliance or forensic review.

- End-user validation: Functional users (not just IT) should confirm data behaves as expected in live processes—such as AP approvals, journal posting, or expense reporting.

- Monitoring tools: Deploy ongoing data health monitoring to flag unexpected changes, duplicate entries, or compliance risks in real time.

Without these steps, teams risk discovering integrity issues long after the migration team has moved on, when the damage is harder to contain.
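The balance reconciliation described above can be sketched in a few lines. The example below assumes GL lines have been exported from both systems as (account, amount) pairs; the account codes, amounts, and the `reconcile` function are hypothetical. It uses `Decimal` rather than floats so that small rounding errors do not show up as false breaks.

```python
from collections import defaultdict
from decimal import Decimal


def balances_by_account(lines):
    """Sum amounts per account from (account, amount_string) pairs."""
    totals = defaultdict(Decimal)
    for account, amount in lines:
        totals[account] += Decimal(amount)
    return totals


def reconcile(source_lines, target_lines, tolerance=Decimal("0.00")):
    """Return {account: target minus source} where the gap exceeds tolerance."""
    src = balances_by_account(source_lines)
    tgt = balances_by_account(target_lines)
    breaks = {}
    for account in sorted(src.keys() | tgt.keys()):
        diff = tgt.get(account, Decimal("0")) - src.get(account, Decimal("0"))
        if abs(diff) > tolerance:
            breaks[account] = diff
    return breaks


# Illustrative GL activity; not real balances.
legacy_lines = [
    ("1000", "500.00"),
    ("1000", "-120.25"),
    ("2000", "300.00"),
    ("3000", "45.10"),
]
cloud_lines = [
    ("1000", "379.75"),
    ("2000", "299.00"),
]

breaks = reconcile(legacy_lines, cloud_lines)
for account, diff in breaks.items():
    print(f"Account {account}: target differs from source by {diff}")
```

Keeping the output of each run, together with the inputs it was computed from, also gives auditors a record of when balances were confirmed and what was found.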

How oAppsNET Supports Clean, Confident Oracle Migrations

oAppsNET understands that ERP transformation projects live or die by the quality of their data.

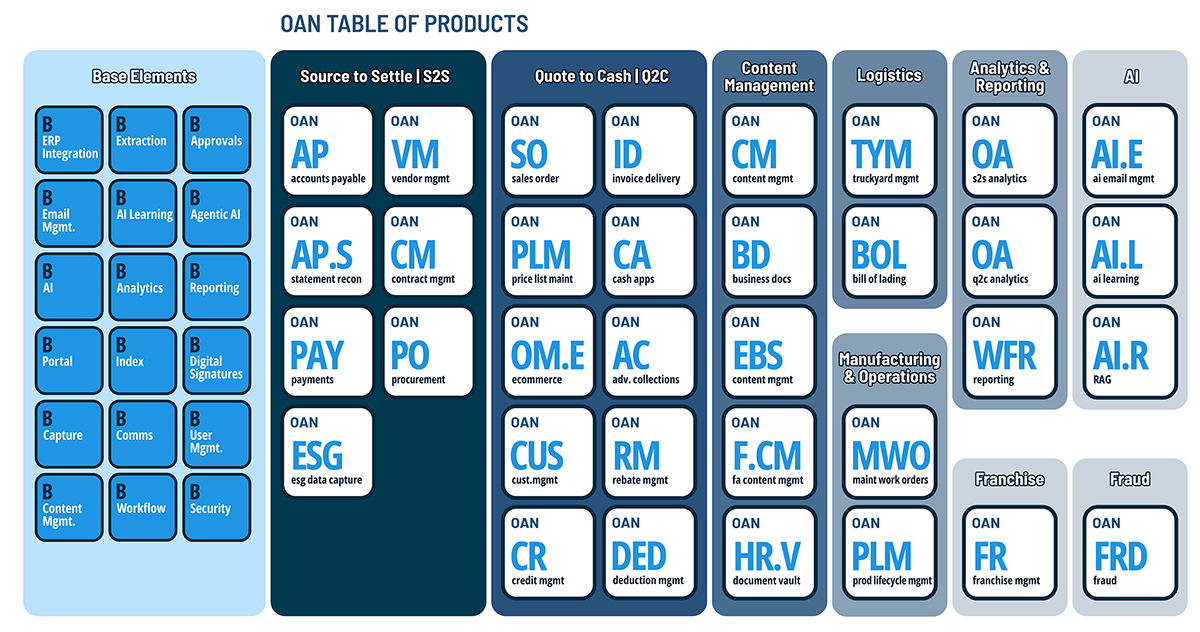

Whether you’re moving from Oracle EBS to Oracle Cloud, consolidating environments, or performing an upgrade, our migration support services are anchored in:

- Automated data testing and reconciliation tools

- Oracle-native validation scripts and integration know-how

- Experience with Tricentis and other regression testing platforms

- Governance frameworks that include functional SMEs and business leads—not just technical teams

We tailor our approach to your migration strategy—cloud-first, hybrid, or incremental—and help ensure your financial data is not only moved but also trusted.

ERP migrations are complex by nature. But data integrity doesn’t have to be a gamble. By embedding automated validation, cross-functional testing, and structured reconciliation into your migration plan, you de-risk transformation without sacrificing speed.

If you’re planning a move, or already mid-flight, let oAppsNET help you get it right the first time.